This post is the first of a few on networking for Dell EMC’s new MX7000 modular chassis platform. Dell EMC PowerEdge MX is a completely redesigned modular solution, built from the ground up on the tenets of “composability”, or what Dell EMC calls “Kinetic” infrastructure: the disaggregation of compute, storage and networking fabric resources into shared pools that can be made available on demand in a “service-centric” model. It is the successor to the M1000e platform, which is around 10 years old at this point.

There are multi-layered innovations at work here, on multiple axes. The compute, storage and network resources are composable and elastic, scalable and responsive. The infrastructure itself offers simplified, unified automation and control. It is future-ready, with multi-generational support for CPUs and connectivity.

Components

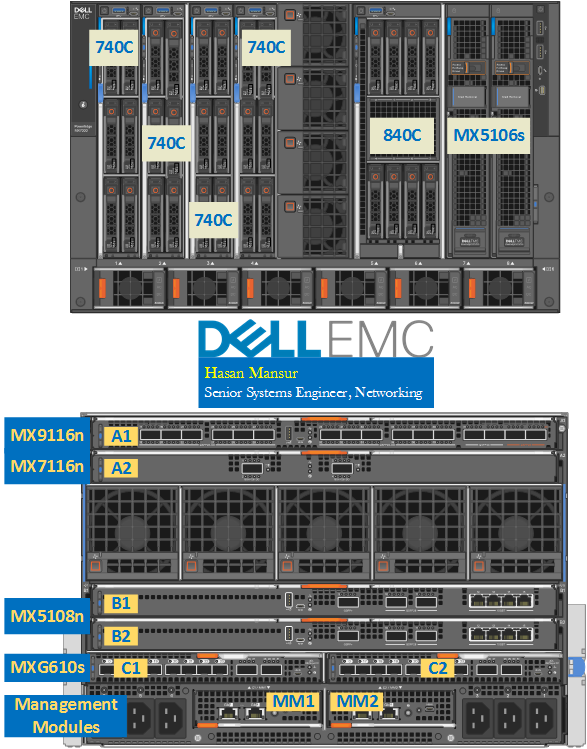

As the focus of this post is MX Networking, I shall not spend too much time discussing the other components. In summary, the 7U-high, 8-slot-wide modular chassis offers at launch:

- Compute Sleds
  - 2-socket, single width: MX740C
  - 4-socket, double width: MX840C
- Storage Sled
  - Single width (direct-attach SAS): MX5016
- Networking
  - I/O Fabrics – 3 fabrics, 2 modules per fabric
    - Fabric A & B: General Purpose – Ethernet & Future I/O
    - Fabric C: Storage – FC & SAS
  - Switches & Pass-through
    - Ethernet
      - Switches
        - MX9116n
        - MX7116n (Expander Module used with MX9116n)
        - MX5108n
      - Pass-Through
        - 10G Base-T
        - 25G SFP28
    - FC Switch
      - MXG610s
    - SAS Switch
      - MX5000s
- Management Module
  - MX9002m

The figure below depicts a Fabric Switching Engine (MX9116n) in Fabric A, slot A1. Slot A2 is occupied by an MX7116n Expander Module. Fabric B slots B1 and B2 are each occupied by an MX5108n switch. Fabric C slots C1 and C2 are each occupied by an MXG610s FC switch.

I will quickly discuss the Management Modules before starting with the Network IOMs. We will revisit Management again once we have covered some of the other bits that have a bearing on it.

Management

The image above depicts 2x Management Modules at the bottom of the chassis (rear view). These are the MX9002m modules, which host the OpenManage Enterprise – Modular (OME-M) console. Each module is made up of two elements:

- Management Services Module (MSM)

- Enclosure Controller (EC)

The MSM mounts on to the EC board, and it is the MSM that actually hosts the OME-M console. The EC, meanwhile, is responsible for power, cooling and so on. It also owns the externally exposed Ethernet ports on the Management Module, plus the Management Ethernet Fabric (Fabric D). The chassis supports 2x EC modules.

This OME-M console is used for end-to-end life-cycle management of the servers, storage and networking in the chassis. From it, we can create templates/profiles for the servers, containing FC/Ethernet parameters for the NICs and so on. Each chassis supports 2x of these modules for redundancy. Each module in turn provides 2x Ethernet ports, both for redundancy and for unifying management in a multi-chassis design. In a multi-chassis design, any chassis can be the management lead, and all network modules are managed from the lead chassis. Currently, a single Multi-Chassis Management domain can span 20 chassis, and the chassis themselves can be grouped into multiple scalable fabrics.

In subsequent posts, we shall discuss the Switch operating modes – Smart Fabric & Full Switch. However, a quick mention here of the Management Aspect.

All Network IO Modules unify themselves in a Smart Fabric Management Cluster. Even when we operate the switches in Full Switch mode, the management layer still runs (or simulates) a Smart Fabric management endpoint. Similar to the Smart Fabric operation I have described in my dedicated post on Smart Fabric Services, the cluster presents a single REST API endpoint to the OME-M console, to centrally manage all networking IOMs in a multi-chassis group. As mentioned, a Multi-Chassis Management group can currently comprise up to 20 chassis.

Of course, all of this assumes the use of OS10, of which Smart Fabric Services (SFS) is a feature. Support for select Dell EMC ON/SDN ecosystem solutions on these modules is a roadmap item. However, in their case the Smart Fabric Management Cluster will no longer apply, as SFS is a feature exclusive to Dell EMC OS10.

Networking & I/O

Midplane

MX7000 employs orthogonal connections (except for Fabric C), which eliminate the need for a midplane. Right away, this benefits us by eliminating:

- A point of failure in the design

- Midplane upgrade requirements when adopting future I/O

The elimination of the midplane also aligns naturally with the vision of realizing absolute composability via disaggregated components, down to FPGAs, GPUs, memory etc.

As you might have noted, Fabric A and B run the width of the chassis, whereas Fabric C is side by side. This means that the (Rear, Horizontal) IO Modules in Fabric A and B, can have their connectors mate directly, with the Mezzanine (A & B) cards in the (Front, Vertical) compute sleds – via the orthogonal connectors. A single width compute sled supports up to two Mezzanine cards (one each for fabrics A & B). A double width compute sled supports up to four.

Since Fabric C is side by side, it does need a midplane. The IOMs in Fabric C plug directly into the Main Distribution Board, using three right-angle connectors. While this fabric is primarily focused on storage via FC or SAS, other interconnect types can in fact use it in the future. For now, the SAS switching option provides connectivity to the internal SAS storage sled, the MX5016. External options may become available in the future. The FC option provides connectivity to external FC SAN/storage.

MX7000 IO Fabrics

- Fabric A and B are General purpose. In the predecessor M1000e, all three fabrics were general purpose.

- Fabric C is storage focused. While it supports 8/16/32G today, the midplane in Fabric C is capable of supporting 128G in the future. Similarly, while the connectivity today supports SAS and FC, other interconnects are possible in the future.
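
The fabric roles above can be captured in a small lookup. The sketch below is purely illustrative: `FABRIC_SLOTS`, `MODULE_FABRICS` and `can_install` are hypothetical names, not part of OME-M or OS10, and the module-to-fabric mapping only encodes what this post describes (Ethernet IOMs in Fabrics A and B, FC and SAS switches in Fabric C).

```python
# Slots per fabric, as described in this post (3 fabrics, 2 modules each).
FABRIC_SLOTS = {
    "A": ("A1", "A2"),
    "B": ("B1", "B2"),
    "C": ("C1", "C2"),
}

# Fabrics each IOM is placed in, per this post. Illustrative table only.
MODULE_FABRICS = {
    "MX9116n": {"A", "B"},
    "MX7116n": {"A", "B"},
    "MX5108n": {"A", "B"},
    "25G PTM": {"A", "B"},
    "10G PTM": {"A", "B"},
    "MXG610s": {"C"},
    "MX5000s": {"C"},
}


def can_install(module: str, slot: str) -> bool:
    """Return True if `module` belongs in the fabric that owns `slot`."""
    slot = slot.upper()
    fabric = slot[:1]
    if slot not in FABRIC_SLOTS.get(fabric, ()):
        raise ValueError(f"unknown slot: {slot}")
    if module not in MODULE_FABRICS:
        raise ValueError(f"unknown module: {module}")
    return fabric in MODULE_FABRICS[module]


if __name__ == "__main__":
    print(can_install("MX9116n", "A1"))  # Ethernet switch in Fabric A
    print(can_install("MXG610s", "B2"))  # FC switch in a general-purpose fabric
```

With this table, `can_install("MX9116n", "A1")` is `True`, while `can_install("MXG610s", "B2")` is `False` because the FC switch only appears under Fabric C.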

MX7000 IO Modules

A quick overview of the IO Modules available for MX7000.

Switches

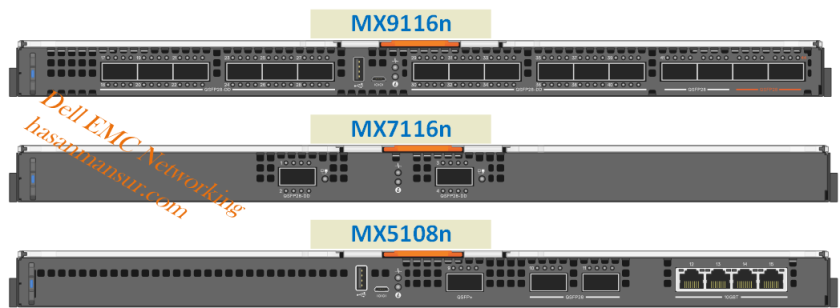

- MX9116n:

- Fabric Switching Engine. A Broadcom Tomahawk+ ASIC with <450 ns latency, support for QSFP28-DD (2x100G) ports, 6.4 Tbps Switching Fabric with 3.2 Bpps Forwarding capacity.

- 16x 25GbE internal ports, 12x [2×100] GbE QSFP28-DD Fabric Expansion ports, 2x 100GbE QSFP28 uplink ports, 2x 100GbE/8x 32G FC Unified ports.

- MX7116n:

- Fabric Expander Module.

- 16x 25GbE internal ports. 2x [2x 100] GbE QSFP28-DD Fabric Expansion Ports.

- Of the two QSFP28-DD ports, the rightmost port is reserved for future use once quad-port NICs become available, and is not utilized for now.

- MX5108n:

- Ethernet Switch. Broadcom Maverick ASIC with <800 ns latency, 960 Gbps Switching Fabric with 720 Mpps Forwarding capacity.

- 8x 25GbE internal ports, 2x 100GbE QSFP28 uplink ports, 1x 40G QSFP+ port, 4x 10GbT ports.
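
As a quick sanity check, the switching fabric figures quoted above can be reproduced by summing the port speeds. The sketch below assumes the quoted capacity is a full-duplex number (each port counted once per direction) and that the MX9116n unified ports are counted in 100GbE mode; both are my assumptions rather than anything stated in the data sheets, and the helper name `total_port_gbps` is made up for this example.

```python
def total_port_gbps(port_groups):
    """Sum count * speed over (count, gbps_per_port) pairs."""
    return sum(count * gbps for count, gbps in port_groups)


# (count, Gbps per port), taken from the port lists in this post.
MX9116N_PORTS = [
    (16, 25),   # internal 25GbE ports
    (12, 200),  # QSFP28-DD ports, 2x 100G each
    (2, 100),   # QSFP28 uplink ports
    (2, 100),   # unified ports, assumed in 100GbE mode
]
MX5108N_PORTS = [
    (8, 25),    # internal 25GbE ports
    (2, 100),   # QSFP28 uplink ports
    (1, 40),    # QSFP+ port
    (4, 10),    # 10GBase-T ports
]

if __name__ == "__main__":
    for name, ports in (("MX9116n", MX9116N_PORTS), ("MX5108n", MX5108N_PORTS)):
        one_way = total_port_gbps(ports)
        print(f"{name}: {one_way} Gbps per direction, {2 * one_way} Gbps full duplex")
```

Under those assumptions the MX9116n comes to 3200 Gbps per direction (6.4 Tbps full duplex) and the MX5108n to 480 Gbps per direction (960 Gbps full duplex), which lines up with the figures listed for each switch.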

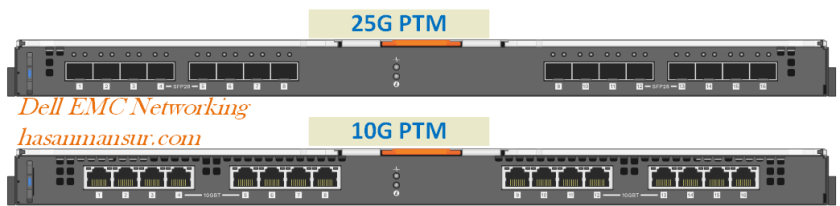

Pass Through Modules

- 25G PTM

- 16x 25GbE internal ports. 16x SFP28 25GbE uplink ports.

- 10G PTM

- 16x 10GbE internal ports. 16x Base-T 10GbE uplink ports.

Storage Switching

- MXG610s

- Gen6 FC Switch. A Brocade GoldenEye4 ASIC with <0.9 µs latency, 1 Tbps Switching Fabric.

- 16x 32G FC internal ports, 8x 32G FC external SFP+ ports, 2x 100GbE/8x 32G FC uplink ports.

- MX5000s

- x4 SAS internal connections to all 8 front-facing slots in MX7000. 24 Gbps SAS switching between internal storage (MX5016) and compute sleds. External options may become available in the future, so while there are 6x external SAS ports exposed at the moment, these are not active.

MX9116n port Characteristics

A few quick notes regarding the MX9116n ports, as these will be relevant for the design discussion in the next post.

- All Internal ports (1/1/1 – 1/1/16) are enabled by default.

- (Current) Servers with Dual port NICs connect to internal ports which are numbered Odd. When Quad port NICs become available, the even numbered ports will be utilized as well.

- Of the 16 external-facing ports:

- Port Group 1-9 (QSFP28-DD) is, by default, in Fabric Expander mode, and is used to connect to MX7116n Expanders.

- Port Group 10-12 (QSFP28-DD) is, by default, in 2x 100GbE breakout mode.

- Port Group 13-14 (QSFP28): these single-density ports (vs. the double-density ports 1-12) are, by default, in 100GbE mode. They allow breakout configurations including 10, 25, 40, 50 and 100G.

- Port Group 15-16 is the Unified Ports. They operate either as Ethernet or as FC. You cannot have the individual ports within a pair operate in different modes. By default, they are set to 1x 100GbE.

- MX9116 has 12x QSFP28-DD ports. In subsequent posts, we will read about Scalable Fabric Architecture. For now, just note that if scalable fabric is to expand to (max) 10 chassis:

- 9 of these ports are consumed for FSE<>FEM

- 2 of these ports are consumed for VLTi

- 1 port is available for user needs.

- This is apart from the 2x single density QSFP28 ports available for uplinks, as well as the 2x unified ports.
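
The port notes above can be sketched as a small lookup plus a port budget. Everything named here (`EXTERNAL_PORT_GROUPS`, `default_mode`, `active_internal_ports`, `dd_port_budget`) is hypothetical and exists only for this illustration. The budget also assumes that the number of FSE<>FEM links grows as one per additional chassis (chassis count minus one), which matches the 9 links quoted for 10 chassis but is otherwise my extrapolation.

```python
QSFP28_DD_PORTS = 12   # double-density ports on the MX9116n
VLTI_PORTS = 2         # ports used for VLTi, per this post
MAX_CHASSIS = 10       # maximum scalable fabric size, per this post

# (first port group, last port group, port type, default mode)
EXTERNAL_PORT_GROUPS = [
    (1, 9, "QSFP28-DD", "Fabric Expander"),
    (10, 12, "QSFP28-DD", "2x 100GbE breakout"),
    (13, 14, "QSFP28", "100GbE"),
    (15, 16, "Unified", "1x 100GbE"),
]


def default_mode(port_group: int) -> str:
    """Return the default mode for an external port group number (1-16)."""
    for first, last, _port_type, mode in EXTERNAL_PORT_GROUPS:
        if first <= port_group <= last:
            return mode
    raise ValueError(f"port group out of range: {port_group}")


def active_internal_ports(nic_ports: int) -> list[int]:
    """Internal ports (1-16) in use: odd ones for dual-port NICs, all for quad."""
    if nic_ports == 2:
        return list(range(1, 17, 2))
    if nic_ports == 4:
        return list(range(1, 17))
    raise ValueError("expected a dual-port (2) or quad-port (4) NIC")


def dd_port_budget(chassis: int) -> dict[str, int]:
    """Split the 12 QSFP28-DD ports for a fabric of `chassis` chassis.

    Assumption: one FSE<>FEM link per additional chassis (chassis - 1).
    """
    if not 2 <= chassis <= MAX_CHASSIS:
        raise ValueError(f"chassis count must be between 2 and {MAX_CHASSIS}")
    fem_links = chassis - 1
    free = QSFP28_DD_PORTS - fem_links - VLTI_PORTS
    return {"fse_fem": fem_links, "vlti": VLTI_PORTS, "free": free}


if __name__ == "__main__":
    print(default_mode(11))
    print(active_internal_ports(2))
    print(dd_port_budget(10))
```

For the maximum 10-chassis fabric, `dd_port_budget(10)` gives 9 ports for FSE<>FEM, 2 for VLTi and 1 left over, matching the breakdown in the list above.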

In the next post, we look at Operating Modes & IOM Selection, followed by subsequent post(s) on Connectivity and Architecture with the MX7000 Modular Chassis.

Excellent post Hasan.

Minor typo. IOMs should say ‘horizontal’ and sleds should say ‘vertical’.

As you might have noted, Fabric A and B run the width of the chassis, whereas Fabric C is side by side. This means that the (Rear, Vertical) IO Modules in Fabric A and B, can have their connectors mate directly, with the Mezzanine (A & B) cards in the (Front, horizontal) compute sleds – via the orthogonal connectors.

You are absolutely correct Shashwat !

Thanks for pointing this out, I shall amend.

And thanks for the compliment. Much appreciated!

hey hasan,

Justin here 🙂 hope you are well my friend it has been years since we last spoke 🙂 These pages are awesome! I came across these while upskilling to deliver MX7000 training. I’m a bit bamboozled with the networking side of the MX so big thank you for the networking guide!

Justin,

It is so good to hear from you, must be 10 years or more since we last worked together.

Thanks a bunch for your appreciation ! I have not been able to post new content of late as my schedule is just too hectic now.

If you have questions around MX Networking, drop me an email/message internally and we can schedule a call to discuss any aspects. I will be happy to help.

Hasan